Spatial Intelligence

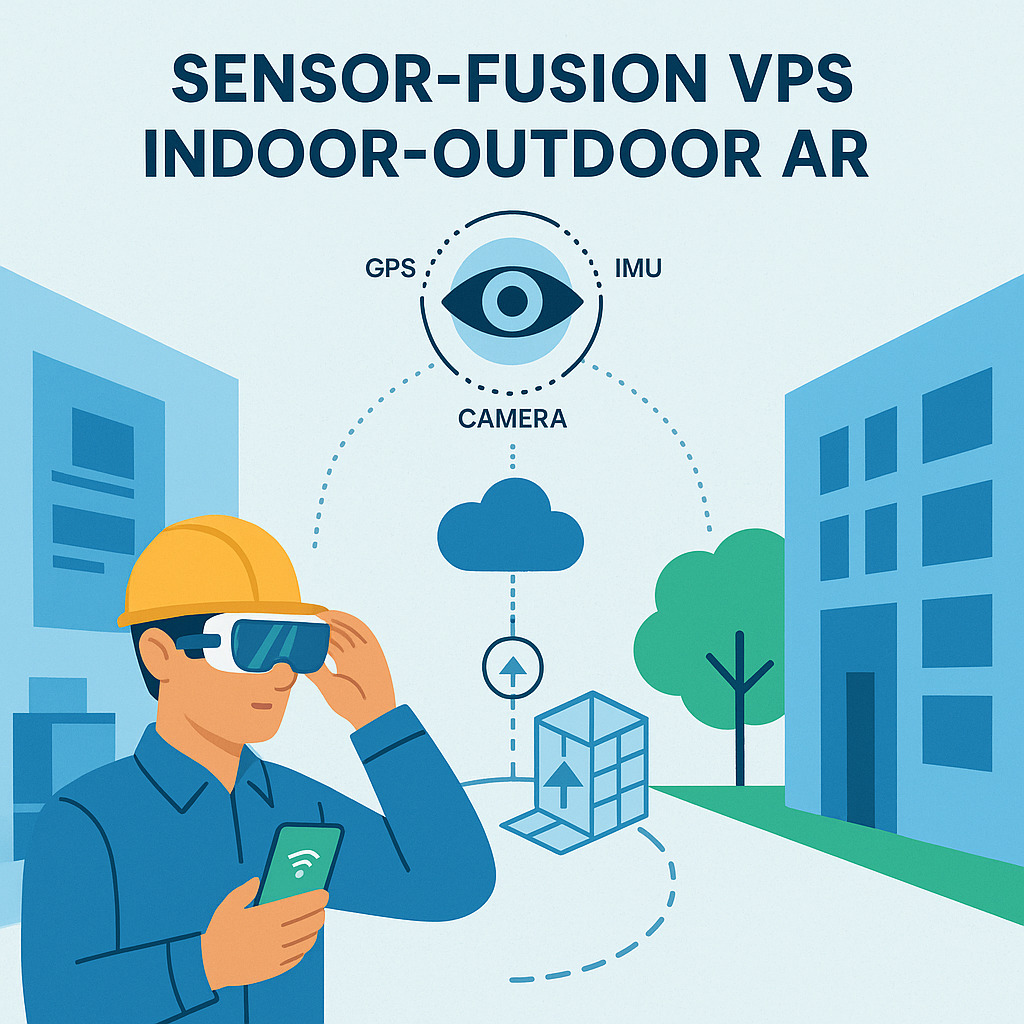

Augmented Reality (AR) applications increasingly demand seamless indoor-outdoor positioning. Enterprises need precise, low-latency localization, driven by vision-AI sensor fusion, to deliver navigation, inspection, and guidance across large, complex sites. MultiSet’s Visual Positioning System (VPS) combines camera imagery with AI and additional sensors (IMU, GPS, etc.) to localize devices with centimeter-level accuracy and minimal drift. The system works with multiple types of maps and delivers precise 6 DoF localization even in changing lighting conditions.

In practice, MultiSet fuses live camera frames with learned visual features and optional depth/IMU data, enabling robust tracking through dynamic lighting, clutter, or metal-rich environments. For example, a trained deep network extracts dense image features and semantic context (illumination-invariant cues, edges, textures), so map matching remains stable even when shadows or reflections change. By integrating multiple modalities, MultiSet’s VPS achieves real-time, sub-10 cm accuracy across Android, iOS, and XR headsets. As of July 2025, MultiSet supports Meta Quest’s passthrough API, letting developers build location-based mixed-reality apps on MultiSet’s sensor fusion tech stack.

Lightweight by design. MultiSet’s localization call transmits only kilobytes of feature data, often smaller than a single smartphone photo - so even on 4G or constrained Wi-Fi the round-trip is negligible. The vision-AI models are pruned and quantized to run on mobile GPUs/NPUs, keeping CPU load and battery drain minimal. In practice, a typical Android device localizes in <2 s and then operates at 60 fps without perceptible heat-up. Self-hosting is an optional deployment model driven by data-sovereignty or regulatory needs - not by performance or cost concerns. Likewise, the fully on-device mode eliminates cloud calls altogether, giving both privacy and zero-connectivity resilience at no extra runtime cost.
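
To put the "kilobytes of feature data" claim in perspective, here is a back-of-the-envelope sketch of the network cost of one localization call. The payload size, uplink speed, and latency below are illustrative assumptions, not measured MultiSet figures, and server-side processing time is not included.

```python
def round_trip_ms(payload_bytes: int, uplink_bps: float, network_rtt_ms: float) -> float:
    """Estimate upload time plus network round-trip latency, in milliseconds."""
    upload_ms = payload_bytes * 8 / uplink_bps * 1000.0
    return upload_ms + network_rtt_ms


# Illustrative numbers only (assumptions): 50 KB of features,
# a 5 Mbit/s uplink, and 60 ms of network latency.
estimate = round_trip_ms(payload_bytes=50_000, uplink_bps=5_000_000, network_rtt_ms=60.0)
print(f"estimated network round trip: {estimate:.0f} ms")
```

Under these assumed numbers the network share of a localization call is a small fraction of the sub-2-second localization time quoted above.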

The iOS-native approach integrates tightly with CoreMotion, reducing CPU load by ~40% and dramatically cutting drift across large areas of 10,000 square meters or more. In practice, this means MultiSet’s on-device fusion uses the device GPU and optimized neural networks for image querying, while ARKit/ARCore handle the rest. The result is lower battery draw and a lighter memory footprint than generic cross-platform solutions. Even on resource-constrained AR devices (Meta Quest, iPhone, Google Pixel), MultiSet balances map detail and inference speed so that AR scenes remain smooth. For example, depth or SLAM data from HoloLens or iPhone Pro can optionally be included: LiDAR-based geometry provides weather-resilient range information and occlusion modeling (critical in low light or fog), while IMU data smooths motion between frames. Meanwhile, the networks are trained end-to-end on diverse scenes, so they learn features that are robust to lighting changes.
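
The idea of IMU data smoothing motion between visual fixes can be illustrated with a toy complementary filter. This is a minimal sketch under simplifying assumptions (position only, velocity already derived from the IMU, a hypothetical fixed blend gain); it is not MultiSet’s actual fusion algorithm, which the article does not describe in detail.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PositionFilter:
    """Toy complementary filter: inertial prediction plus visual correction."""

    position: Vec3
    gain: float = 0.3  # hypothetical blend weight applied to each visual fix

    def predict(self, velocity: Vec3, dt: float) -> Vec3:
        """Advance the estimate using IMU-derived velocity (m/s) over dt seconds."""
        self.position = tuple(p + v * dt for p, v in zip(self.position, velocity))
        return self.position

    def correct(self, visual_fix: Vec3) -> Vec3:
        """Pull the estimate part of the way toward a visual localization fix."""
        self.position = tuple(
            p + self.gain * (f - p) for p, f in zip(self.position, visual_fix)
        )
        return self.position


tracker = PositionFilter(position=(0.0, 0.0, 0.0), gain=0.5)
tracker.predict(velocity=(1.0, 0.0, 0.0), dt=0.5)   # IMU-only step between frames
tracker.correct(visual_fix=(1.5, 0.0, 0.0))         # visual fix arrives
print(tracker.position)
```

Blending rather than snapping to each visual fix avoids visible jumps in AR content, at the cost of converging over a few frames.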

MultiSet’s VPS integrates tightly with standard AR frameworks (Unity AR Foundation, ARKit/ARCore) to anchor virtual content. In Unity, developers import the MultiSet SDK and add their 3D models as children of a special “Map Space” GameObject. After localization, MultiSet sets the Map Space’s transform to align with the real-world map, so all child assets appear in the correct locations. For example, a warehouse may load a textured mesh (.glb) of the building and spawn wayfinding arrows or equipment models inside this Map Space. The mesh is used only for content placement and then discarded in the final build.
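
The alignment step described above can be sketched with plain rigid transforms. Given the device pose in the map frame (what a localization call would return) and the device pose in the AR session’s world frame at the same instant, the Map Space pose in the world is `world_from_device * inverse(map_from_device)`. The function names and the yaw-only rotation are simplifying assumptions for illustration; this is not the MultiSet SDK’s code.

```python
import math


def pose(x, y, z, yaw_deg=0.0):
    """4x4 rigid transform: rotation about the vertical (y) axis plus translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [
        [c, 0.0, s, x],
        [0.0, 1.0, 0.0, y],
        [-s, 0.0, c, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Matrix product a * b for 4x4 transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rot_t = [[t[j][i] for j in range(3)] for i in range(3)]
    trans = [-sum(rot_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [rot_t[i] + [trans[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def map_space_in_world(world_from_device, map_from_device):
    """Pose to assign to the Map Space parent so map content lines up with reality."""
    return compose(world_from_device, invert_rigid(map_from_device))


def apply(t, point):
    """Transform a 3D point by a 4x4 rigid transform."""
    return tuple(sum(t[i][k] * point[k] for k in range(3)) + t[i][3] for i in range(3))


world_from_device = pose(1.0, 1.5, 0.0)               # AR session tracking pose
map_from_device = pose(12.0, 1.5, 30.0, yaw_deg=90)   # pose from localization
world_from_map = map_space_in_world(world_from_device, map_from_device)

# An arrow authored on the floor directly below the device, in map coordinates:
arrow_in_world = apply(world_from_map, (12.0, 0.0, 30.0))
print(arrow_in_world)
```

Because every asset is a child of the Map Space, updating this single parent transform repositions all content at once.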

In native ARKit or ARCore apps on iOS/Android, a similar concept applies: once the VPS returns a device pose, the app creates an anchor (ARAnchor in ARKit, Anchor in ARCore) or, in Unity AR Foundation, adjusts the session origin (ARSessionOrigin, or XROrigin in newer versions) so that subsequent AR content sticks to the map’s frame. This keeps anchors persistent: even if the user exits and re-enters the app, as long as the map data is loaded, the AR overlays (e.g. asset information tags, BIM elements) will reappear in the same real-world spots.
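
One way to picture anchor persistence is to store each overlay’s position in map coordinates and re-resolve it after every localization. The sketch below assumes a simple JSON file format and a caller-supplied `map_to_world` function; both are hypothetical and only illustrate the principle.

```python
import json


def save_anchors(anchors):
    """Serialize anchors, keyed by id, with positions in map coordinates."""
    return json.dumps(anchors, sort_keys=True)


def load_anchors(text):
    """Restore anchors saved by save_anchors, with positions as tuples."""
    return {anchor_id: tuple(position) for anchor_id, position in json.loads(text).items()}


def resolve_in_world(anchors, map_to_world):
    """Convert stored map-frame positions to the current session's world frame."""
    return {anchor_id: map_to_world(position) for anchor_id, position in anchors.items()}


stored = save_anchors({"pump-7-tag": (4.0, 1.2, -3.5)})

# Later session: localization yields a new map-to-world mapping.
# Here it is a plain offset, purely for illustration.
restored = load_anchors(stored)
placed = resolve_in_world(restored, lambda p: (p[0] + 1.0, p[1], p[2]))
print(placed)
```

The stored data never depends on a particular AR session, which is why overlays reappear in the same physical spot after a restart.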

MultiSet also supports multi-floor and facility-level transitions. Developers define each level as a separate Map in the MultiSet cloud portal and can group those maps into a MapSet, which is simply a collection of floor maps for one building. The iOS and Unity SDKs can query localization with either a single mapId or a mapSetId. The app can switch floors (for example, using a barometer or UI selector) and then immediately localize against the new floor’s map.
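
The choice between a single map and a map set can be sketched as a small request builder. Only the `mapId` and `mapSetId` names come from the text above; the payload shape and the function itself are assumptions for illustration, not the MultiSet API.

```python
def build_localization_query(map_id=None, map_set_id=None):
    """Build a query that targets exactly one of a single map or a map set."""
    if (map_id is None) == (map_set_id is None):
        raise ValueError("provide exactly one of map_id or map_set_id")
    if map_id is not None:
        return {"mapId": map_id}
    return {"mapSetId": map_set_id}


# Hypothetical identifiers, for illustration only.
print(build_localization_query(map_id="warehouse-floor-2"))
print(build_localization_query(map_set_id="warehouse-building-a"))
```

Rejecting ambiguous input early keeps floor-switching logic simple: the app either pins a known floor or lets the map set cover the whole building.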

Critically, the Map Space hierarchy holds state: when the Map Space origin shifts to the new map’s coordinates, the child content stays correctly positioned. In practice, an app might detect a floor change via sensors (or a staircase marker) and then re-run the localization query with the other map’s ID. Because all AR objects remain under the same parent transform, their relative placement is preserved. This approach aligns with AREA’s recommendation for handling floor transitions and map sets. The AREA report notes that designers may combine “barometer or UWB to assist floor-level detection” in multi-story buildings, and that a configuration must allow switching between Map and MapSet.
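
Barometer-assisted floor detection can be sketched with the standard international barometric formula, using a pressure reading taken on the ground floor as the reference. The floor elevations and map IDs below are hypothetical, and a production app would likely add filtering and hysteresis to avoid flip-flopping on stairs.

```python
def relative_height_m(pressure_hpa, reference_hpa):
    """Approximate height above the reference level (standard-atmosphere formula)."""
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))


def select_floor(height_m, floor_elevations):
    """Return the map ID whose floor elevation is closest to the estimated height."""
    return min(floor_elevations, key=lambda map_id: abs(floor_elevations[map_id] - height_m))


# Hypothetical map IDs and floor elevations in meters above the ground floor.
floors = {"lobby-map": 0.0, "level-1-map": 4.0, "level-2-map": 8.0}

height = relative_height_m(pressure_hpa=1012.25, reference_hpa=1013.25)
next_map_id = select_floor(height, floors)
print(f"{height:.1f} m above reference, localize against {next_map_id}")
```

The selected ID would then be used for the next localization query, while the content hierarchy stays untouched.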

MultiSet’s VPS also plays nicely with enterprise data workflows. Since each map can be geo-referenced, content (such as CAD/BIM models, asset registers or IoT data) can be synced to the real world. For instance, a factory’s BIM can be aligned to MultiSet’s map coordinates so that virtual piping or machinery drawn in the BIM appears exactly on the physical infrastructure. The AREA interviews highlight this need: solutions should offer “integration with GIS and digital twin data for geospatial alignment”. In this way, AR content and real-world digital twins share a common coordinate frame.
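
Geo-referencing a map amounts to knowing the latitude and longitude of its origin and the compass heading of its axes. The sketch below converts a planar map position to latitude and longitude using a small-offset spherical approximation, which is reasonable at site scale. The axis convention (heading measured clockwise from north to the map’s forward axis) is an assumption made for this example, not MultiSet’s documented convention.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS84 equatorial radius


def map_to_east_north(right_m, forward_m, heading_deg):
    """Rotate planar map coordinates into east/north offsets.

    heading_deg: clockwise angle from true north to the map's forward axis.
    """
    h = math.radians(heading_deg)
    east = right_m * math.cos(h) + forward_m * math.sin(h)
    north = -right_m * math.sin(h) + forward_m * math.cos(h)
    return east, north


def east_north_to_lat_lon(origin_lat, origin_lon, east_m, north_m):
    """Small-offset approximation: valid for offsets of a few kilometers."""
    lat = origin_lat + math.degrees(north_m / EARTH_RADIUS_M)
    lon = origin_lon + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(origin_lat)))
    )
    return lat, lon


# Hypothetical site origin and a valve located 100 m "forward" in the map.
east, north = map_to_east_north(right_m=0.0, forward_m=100.0, heading_deg=90.0)
lat, lon = east_north_to_lat_lon(48.0, 11.0, east, north)
print(f"valve at lat={lat:.6f}, lon={lon:.6f}")
```

The inverse mapping works the same way, which is how GIS or BIM features expressed in geographic coordinates could be brought into the map frame.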

MultiSet’s self-hosted mapping lets companies keep control of these references. The system’s emphasis on content anchors means virtual overlays remain “sticky” where placed. As the AREA report explains, anchors are what “lock digital content to the physical world,” and VPS provides the precise localization needed to persist and find anchors reliably. In multi-user scenarios, anyone who localizes to the same map coordinate frame can see shared AR content aligned together – enabling collaborative use cases like paired technicians viewing the same holographic instructions on a pipeline.

Outcome: In pilot benchmarks, navigation time dropped 27%, wrong turns dropped 81%, and first-pass fix error stayed under 6 cm across all zones.

Across all these cases, MultiSet follows AREA’s best practices: it has been proven in “large, GPS-challenged industrial environments” as a multi-layered spatial strategy, and it has already been piloted alongside competing solutions to strike a balance between precision and usability. The result is that users reliably find the exact asset or location even in cluttered yards or dim interiors, dramatically reducing search time. Indeed, an AREA use case study found that a VPS-guided AR maintenance app cut wayfinding time by over 80% compared with static signs.

In the emerging VPS market, each vendor has trade-offs. Google’s ARCore Geospatial API offers broad outdoor coverage via Street View imagery, but it only works where Google has mapped and requires an Internet connection, making it unreliable in private plants or tunnels. Niantic’s Lightship VPS (now Niantic Spatial) similarly excels in geolocated outdoor AR (leveraging user-contributed panoramas), yet it is tied to Niantic’s cloud and public map data. In contrast, Immersal (now part of Hexagon) emphasizes on-premise, scan-based mapping: it allows private indoor scans and offline localization, but it is a paid service with map size limits and slower 2D image matching. Meta’s solution (via Horizon OS and OpenXR Spatial Anchors) is still maturing and is mainly scoped to Meta headsets.

AREA interviews highlight these differences. For example, enterprise users said a self-hosted system like MultiSet is often chosen for indoor applications, whereas a global-scale VPS (Google) might serve outdoors. Importantly, no platform covers all needs. Google lacks fine-grained control and offline modes; others require dense scanning and external processing; and Meta’s anchors cannot yet be easily exported to other ecosystems. By comparison, MultiSet’s technology is device-agnostic and cloud-optional. It ingests any source of scan data (Matterport, Leica, NavVis, phone LiDAR, etc.), and it supports on-device or cloud localization modes. This means companies retain their spatial data (avoiding vendor lock-in), can update maps as assets move, and can let apps fall back to IMU/visual tracking if the map isn’t found. The AREA report specifically notes the value of hybrid and fallback strategies: blending VPS with inertial tracking, markers, or UWB, and using manual triggers when needed. MultiSet’s AI-powered fusion and multi-modal design directly address these insights, offering continuity across environments and robust recovery when conditions change.
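
A fallback strategy of this kind can be sketched as a simple priority chain. The confidence score, threshold, attempt limit, and source names below are hypothetical and chosen for illustration; the article does not specify how MultiSet or the AREA report would parameterize them.

```python
from typing import Optional


def choose_pose_source(
    vps_confidence: Optional[float],
    marker_visible: bool,
    failed_attempts: int,
    min_confidence: float = 0.6,   # hypothetical acceptance threshold
    max_attempts: int = 3,         # hypothetical limit before asking the user
) -> str:
    """Pick the best available positioning source, most precise first."""
    if vps_confidence is not None and vps_confidence >= min_confidence:
        return "vps"
    if marker_visible:
        return "marker"
    if failed_attempts >= max_attempts:
        return "manual_prompt"   # e.g. ask the user to pick a map or scan a marker
    return "tracking_only"       # keep going on IMU/visual tracking and retry


print(choose_pose_source(0.9, marker_visible=False, failed_attempts=0))
print(choose_pose_source(None, marker_visible=True, failed_attempts=1))
print(choose_pose_source(0.2, marker_visible=False, failed_attempts=3))
```

Ordering the checks from most to least precise means the app degrades gracefully instead of dropping AR content the moment localization fails.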

AREA’s recent Visual Positioning Systems report underscores exactly why MultiSet’s approach is timely. The study found that enterprises need seamless handoff between GPS, VPS, and indoor positioning for “continuous positioning”. It calls for VPS that work “in metal-rich, cluttered, and low-signal areas”, which are situations where MultiSet’s sensor fusion shines. The report also emphasizes fault-tolerance: fallback options (QR/markers, map selection) should be built in to “regain user confidence” when localization fails.

In practice, MultiSet maps can easily incorporate visual markers for absolute alignment, or allow tapping the floorplan to help re-localize if needed. On the anchoring front, AREA stresses the need for persistent, shareable anchors. It notes that true multi-user AR depends on everyone localizing to a common reference frame (a VPS map or shared anchor). MultiSet maps serve exactly this role: once a team of workers localizes to the same map, they can all see the same virtual content in sync.

Finally, AREA highlights standardization: future VPS platforms should align with open anchor formats (e.g. OGC GeoPose) and support patchable anchors as scenes change. Because MultiSet is self-hostable, it inherently allows enterprises to update or “patch” their maps and anchors (for example, by merging a new scan after equipment is moved). This meets the report’s vision of a “digital reality ecosystem” with granular data control and offline resilience.
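
For readers unfamiliar with GeoPose, the sketch below emits a pose in what is, to the best of my understanding, the Basic-YPR encoding of OGC GeoPose 1.0 (a position with `lat`, `lon`, `h` and angles with `yaw`, `pitch`, `roll`, in degrees and meters). Field names should be checked against the published standard, and nothing here implies that MultiSet currently exports this format.

```python
import json


def basic_ypr_geopose(lat, lon, height_m, yaw_deg, pitch_deg=0.0, roll_deg=0.0):
    """Serialize an anchor pose as a GeoPose Basic-YPR style JSON document."""
    return json.dumps(
        {
            "position": {"lat": lat, "lon": lon, "h": height_m},
            "angles": {"yaw": yaw_deg, "pitch": pitch_deg, "roll": roll_deg},
        },
        indent=2,
    )


# Hypothetical anchor location, for illustration only.
document = basic_ypr_geopose(lat=48.0, lon=11.0, height_m=520.0, yaw_deg=90.0)
print(document)
```

An open encoding like this is what would let anchors created in one platform be consumed by another.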

In summary, MultiSet’s vision-AI sensor fusion VPS embodies the AREA best practices: it provides precise, persistent localization across indoor/outdoor zones, supports offline and on-device use, and integrates easily with enterprise AR content. By leveraging advanced AI, scalable mapping, and cross-platform SDKs, MultiSet delivers multi-zone continuity and real-time tracking exactly where it’s needed.

Visit MultiSet’s developer docs and API documentation, or contact us to schedule a demo, and see how vision-AI sensor fusion can power your next indoor-outdoor AR project. Join the companies already reducing navigation errors and downtime with MultiSet’s AI-driven VPS.