LiDAR + Vision Fusion: The Scan-Agnostic Visual Positioning System Behind Enterprise-Scale AR

- Shadnam Khan

- Jun 9, 2025

- 7 min read

Updated: Jul 5, 2025

Enterprises don’t operate in demo labs. Instead, they capture spaces with various tools such as LiDAR carts, drone photogrammetry, 360° cameras, and even iPhones. Often, they rely on multiple techniques within the same facility. A “best AR VPS platform” must effectively digest any scan, localize across several floors, and update only the changing zones. MultiSet AI’s VPS was engineered specifically for this purpose.

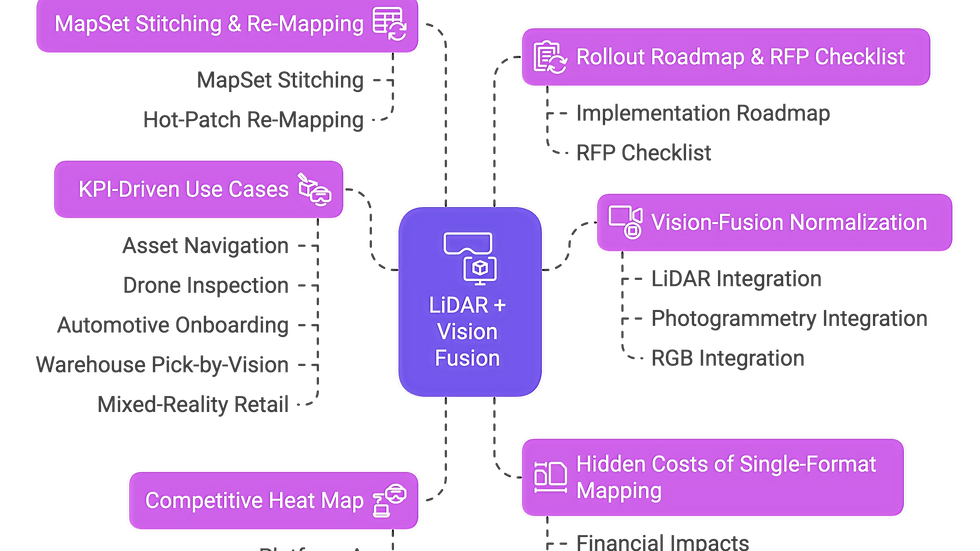

This article unpacks:

The hidden costs of single-format mapping

How Vision-Fusion normalizes LiDAR, photogrammetry, and RGB for centimeter-level indoor AR

MapSet stitching & “hot-patch” re-mapping that prevent the need to reprocess a gigabyte map

Competitive heat map (Platforms A–C) using Poor/Fair/Good/Better/Best scores - MultiSet leads on every buyer-critical dimension

Four deep, KPI-driven use cases - asset navigation, automotive onboarding, warehouse pick-by-vision, mixed-reality retail

A zero-lock-in rollout roadmap and RFP checklist you can copy-paste tomorrow

Why Scan Flexibility Beats Demo-Grade Beauty

Walking the expo floor at any AR conference, you’ll likely see the same glossy demo: a well-lit, open room mapped with a single iPhone. Everything appears perfect—until you exit the booth and enter a real facility. In the real world, a single plant can swing from a dim utility tunnel to a sun-blasted loading bay within fifty steps. One area may need a LiDAR cart due to poor lighting, while another requires a drone because production can’t be shut down long enough for traditional equipment. We’ve lost count of projects that begin with, “One neat scan will suffice,” only to end in a mad dash for additional sensors.

The reality is straightforward: enterprise environments typically require a mix of capture methods shortly after implementation. Why is that?

Lighting extremes - Freezer aisles drop below 5 lux, while office atria can exceed 100,000 lux.

Physical access - A drone can reach skylights more quickly than a scissor lift; LiDAR carts navigate under conveyors where GPS signals weaken.

Legacy geometry - Many plants have already invested in OBJ/BIM meshes—why pay again?

Speed vs fidelity trade-offs - High-resolution photogrammetry excels in showrooms, not on a 300 m conveyor.

Regulatory audit trails - Nuclear sites often prefer time-stamped LiDAR since raw depth is easier to certify than stitched images.

Hidden Cost of Single-Format Pipelines

A logistics operator we know tried to budget around fifty grand to map their flagship warehouse using just phone photogrammetry. Everything went smoothly until they reached the freezer aisles. Metal racks in near-darkness refused to register in the scans, forcing them to rent a LiDAR rover at the last minute. Rental fees, extra software licenses, and overtime to redo the meshes ultimately cost them more than sixty grand on top of the initial fifty. By project completion, their total expenditure was over double the original estimate—solely because their chosen platform couldn’t handle anything that wasn’t a bright, texture-rich JPEG sequence. The lesson? Scan flexibility isn’t a luxury feature; it's the primary factor affecting your true cost of ownership once you leave the showroom and enter the factory floor.

LiDAR, Photogrammetry & Friends - Choosing the Right Hammer for Each Nail

| Input Type | Strength | Weakness | Best Facility Zones | Technical Note |
| --- | --- | --- | --- | --- |
| LiDAR mapping | Fast capture (1 ha/hour), works in darkness | Noisy edges, large file sizes (2–6 GB raw) | Tunnels, freezer racks | ±2 cm accuracy after post-filtering on commercial scanners |
| Photogrammetry | High texture & photorealism, low hardware cost (under $2 k) | Needs good lighting & surface tracking; risk of drift | Fashion retail, office floors | Feature-based bundler/map pipeline |
| Gaussian Splatting | Fast offline render, small file sizes (< 50 MB) | GPU-heavy, limited depth | Pop-up events, showrooms | Multi-plane images fused into “3D billboard” |
| Point-Cloud Anchors | Precise anchor (< 3 cm) | Needs GPS or geospatial guess | Museums, city landmarks, kiosks | Upload ≤ 100 MB point set per anchor |
| Pure RGB SLAM | Universal hardware footprint | Fails in glare, darkness, and repetitive textures | Employee ad-hoc mapping | Relies on ORB/FAST keypoints |

A scalable visual positioning system (VPS) must treat these inputs as “observation classes” and fuse them.
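To make the table actionable during scoping, here is a minimal sketch of a per-zone capture chooser. The `Zone` fields, the 50 lux cutoff, and the rule order are illustrative assumptions, not part of any MultiSet tooling; treat it as a starting checklist rather than a rule book.

```python
from dataclasses import dataclass

# Assumed cutoff for "too dark for photogrammetry"; tune per site.
LOW_LIGHT_LUX = 50.0


@dataclass
class Zone:
    name: str
    lux: float                 # typical ambient light level
    has_legacy_mesh: bool      # OBJ/BIM geometry already exists
    needs_aerial_access: bool  # skylights, stacks, areas above live lines


def suggest_capture(zone: Zone) -> str:
    """Return a first-guess capture method for one zone (hypothetical rules)."""
    if zone.has_legacy_mesh:
        return "reuse OBJ/BIM mesh"
    if zone.lux < LOW_LIGHT_LUX:
        return "LiDAR"
    if zone.needs_aerial_access:
        return "drone photogrammetry"
    return "photogrammetry"


zones = [
    Zone("freezer aisle", 5, False, False),
    Zone("exhaust stacks", 20000, False, True),
    Zone("office floor", 500, False, False),
]
plan = {z.name: suggest_capture(z) for z in zones}
```

The point of the exercise is that one facility usually ends up with several different answers, which is why the positioning layer has to accept all of them.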

Vision-Fusion Visual Positioning System: A Scan-Agnostic Core

MultiSet AI’s architecture is designed around three core principles:

Feature Extractors: They don't differentiate between data from a $150k scanner or an iPhone depth stream.

Consensus-Based Posing: A pose is generated only when consensus exceeds a dynamic threshold. No single eyewitness can validate a pose; enough independent observations must agree to clear that threshold.

Drift Correction: A proprietary process corrects residual drift (< 20 mm) within 250 ms—imperceptible to users.
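The consensus idea can be illustrated with a small sketch. This is not MultiSet's algorithm, which is not published here; it assumes each observation class produces its own position estimate, uses a component-wise median as the reference point, and accepts a pose only if a caller-supplied fraction of sources land within a tolerance. The function name, `tolerance_m`, and `min_fraction` are hypothetical.

```python
import math
from statistics import median


def consensus_pose(estimates, tolerance_m=0.05, min_fraction=0.6):
    """estimates: list of (source, x, y, z) in metres.

    Returns the mean position of the agreeing sources, or None when
    agreement is too weak. min_fraction stands in for the dynamic threshold.
    """
    if len(estimates) < 2:
        return None  # a single observation can never validate itself

    # Component-wise median is a simple, outlier-resistant reference point.
    center = tuple(median(e[i] for e in estimates) for i in (1, 2, 3))

    inliers = [e for e in estimates if math.dist(e[1:], center) <= tolerance_m]
    if len(inliers) / len(estimates) < min_fraction:
        return None

    n = len(inliers)
    return tuple(sum(e[i] for e in inliers) / n for i in (1, 2, 3))


estimates = [
    ("lidar", 1.00, 2.00, 0.0),
    ("rgb", 1.02, 2.01, 0.0),
    ("depth", 3.00, 2.00, 0.0),  # disagrees with the other two
]
pose = consensus_pose(estimates)
strict = consensus_pose(estimates, min_fraction=0.9)
```

With the sample data, two of three sources agree, so the default threshold yields a pose while the stricter one returns `None`. A production system would fuse full 6-DoF poses and weight sources by confidence.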

Enterprise KPIs

An independent audit from the Augmented Reality Enterprise Alliance tested and ranked VPS vendors based on five enterprise KPIs: Environmental Resistance, Indoor Performance, Dynamic Handling, Update Cost, Free-Trial Availability.

| KPI | MultiSet AI | Summary Verdict |
| --- | --- | --- |
| Environmental resistance | Best | Works under high-lux glare or low-lux basements without extra maps |
| Indoor performance | Best | Centimeter accuracy across a 250 m walk |
| Dynamic environment handling | Better–Best | Responds well to moving forklifts; minor jitter auto-corrected |
| Update cost | Best | Hot-patch tile < 30 MB; devices pull updates automatically |
| Free trial | Better | Offers 10 free maps per user |

Each of competitor platforms A–C dropped to Fair or Poor on at least one KPI. This illustrates the difference a scan-agnostic core makes.

MapSet: Turning Scattered Scans into One Spatial Canvas

Most VPS providers say, “Upload your mesh. Wait an hour.” That approach works until your mesh hits 4 GB and your freezer area is still unmapped.

MapSet rewrites the playbook:

Tiles before ties – Divide large venues into 50 × 50 m (or floor-based) segments.

Stitch once – Relative transformations yield a single origin; devices can navigate freely.

Optional overlap – While overlap speeds optimization, it’s not mandatory. A walk/click merge is achievable in non-overlapping data sets.

Permission scopes – Each tile inherits or overrides authentication tokens; AR overlay in a specific storage unit displays only if users possess the necessary access.
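The "stitch once" step boils down to chaining relative transforms until every tile is expressed in one origin frame. Below is a minimal sketch of that idea, simplified to 2D poses (x, y, yaw) and assuming the tile links form a tree; the function names and the `links` layout are hypothetical, and a real pipeline would use full 3D transforms.

```python
import math


def compose(a, b):
    """Combine two 2D rigid transforms: apply b first, then a."""
    ax, ay, ath = a
    bx, by, bth = b
    return (
        ax + bx * math.cos(ath) - by * math.sin(ath),
        ay + bx * math.sin(ath) + by * math.cos(ath),
        ath + bth,
    )


def tile_to_origin(tile, links):
    """links: {child_tile: (parent_tile, (dx, dy, dyaw))}; the root has no entry."""
    pose = (0.0, 0.0, 0.0)
    while tile in links:
        parent, relative = links[tile]
        pose = compose(relative, pose)  # lift the pose into the parent's frame
        tile = parent
    return pose


def to_origin_point(pose, point):
    """Map a point from tile coordinates into the shared origin frame."""
    x, y, th = pose
    px, py = point
    return (
        x + px * math.cos(th) - py * math.sin(th),
        y + px * math.sin(th) + py * math.cos(th),
    )


links = {
    "B": ("A", (50.0, 0.0, 0.0)),          # B sits 50 m east of A
    "C": ("B", (0.0, 50.0, math.pi / 2)),  # C sits 50 m north of B, rotated 90 degrees
}
pose_c = tile_to_origin("C", links)
anchor = to_origin_point(pose_c, (1.0, 0.0))
```

Once every tile has a pose in the origin frame, a device can cross tile boundaries without re-anchoring, because all content shares the same coordinates.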

Real-World Metrics

Hand-off latency: 120–200 ms median on Android; faster on iOS.

Tile size: 15–25 MB compressed (LiDAR + photo + others).

Max tested MapSet: 180 tiles across four floors maintaining 55 fps in the Unity app.

Hot-Patch Re-Mapping Saves Money

For a 1.2 million ft² property, a full facility reprocessing typically requires 3–4 hours of GPU time and 80 GB of data transfer. Hot-patching 12 tiles drops the requirement to 400 MB and under 20 minutes. At $200/hour for GPU time and $0.12/GB for egress, costs fall from roughly $610–$810 to under $70.

AR deployments can finally stay affordable beyond the pilot stage.
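For readers who want to plug in their own rates, here is a rough cost model using only the figures quoted above. It assumes cost is simply GPU hours times the hourly rate plus transfer volume times the egress rate, and that the patch is billed for the full 20 minutes of GPU time, so a real bill may come in lower.

```python
GPU_RATE_PER_HOUR = 200.0   # USD, from the text
EGRESS_RATE_PER_GB = 0.12   # USD, from the text


def job_cost(gpu_hours, transfer_gb):
    """Simple linear model: compute time plus data egress."""
    return gpu_hours * GPU_RATE_PER_HOUR + transfer_gb * EGRESS_RATE_PER_GB


full_low = job_cost(3, 80)           # full rebuild, 3 GPU hours
full_high = job_cost(4, 80)          # full rebuild, 4 GPU hours
patch_max = job_cost(20 / 60, 0.4)   # 12-tile hot patch, upper bound

print(f"full rebuild: ${full_low:.2f} to ${full_high:.2f}")
print(f"hot patch:    at most ${patch_max:.2f}")
```

Under these assumptions a full rebuild lands between roughly $610 and $810, while the patch stays below about $67. GPU time dominates both totals; egress is a rounding error at these volumes.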

Competitive Heat Map: How Scan Flexibility Tilts the Field

| Capability | MultiSet AI | Platform A | Platform B | Platform C |
| --- | --- | --- | --- | --- |
| Scan-agnostic ingest (LiDAR + photo + OBJ) | Best | Poor | Fair | Poor |
| Lighting robustness (glare, < 5 lux) | Best | Good | Fair | Fair |
| Dynamic handling | Better | Good | Fair | Fair |
| Multi-floor stitching | Best | Poor | Poor | Fair |
| Hot-patch tile updates | Best | Fair | Poor | Poor |

Note: Scores reflect public docs, user forums, and Augmented Reality Enterprise Alliance data; they denote relative enterprise fitness, not hobby AR usage.
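If you want to fold the heat map into an RFP scorecard, one option is to map the labels to numbers and apply your own weights. The sketch below copies the ratings from the table; the 0 to 4 numeric scale and the weighting scheme are assumptions for illustration, not part of the audit.

```python
# Assumed numeric mapping for the qualitative labels.
SCALE = {"Poor": 0, "Fair": 1, "Good": 2, "Better": 3, "Best": 4}

VENDORS = ["MultiSet AI", "Platform A", "Platform B", "Platform C"]

# Ratings in the same vendor order as the table.
HEAT_MAP = {
    "Scan-agnostic ingest": ["Best", "Poor", "Fair", "Poor"],
    "Lighting robustness": ["Best", "Good", "Fair", "Fair"],
    "Dynamic handling": ["Better", "Good", "Fair", "Fair"],
    "Multi-floor stitching": ["Best", "Poor", "Poor", "Fair"],
    "Hot-patch tile updates": ["Best", "Fair", "Poor", "Poor"],
}


def weighted_scores(heat_map, weights=None):
    """Sum numeric ratings per vendor; unlisted capabilities get weight 1."""
    weights = weights or {}
    totals = {vendor: 0.0 for vendor in VENDORS}
    for capability, labels in heat_map.items():
        w = weights.get(capability, 1.0)
        for vendor, label in zip(VENDORS, labels):
            totals[vendor] += w * SCALE[label]
    return totals


equal = weighted_scores(HEAT_MAP)
multi_floor_heavy = weighted_scores(HEAT_MAP, {"Multi-floor stitching": 3.0})
```

Changing the weights lets each buyer reflect what matters on their own floor, for example tripling multi-floor stitching for a high-rise facility.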

Use-Case Deep Dives

Here are four real-world stories showcasing how scan-agnostic Vision-Fusion and MapSet hot-patching outperform single-format VPS tools.

Asset Navigation in a Gas-Turbine Plant

Capture: Two LiDAR iPads mapped turbine bays B1–B7 (approximately 15 minutes each). A DJI M300 drone captured 320 images around the exhaust stacks during a 7-minute photogrammetry flight. MapSet merged 14 tiles.

Deployment: Technicians carry IP-rated Android tablets that show “You Are Here” arrows to the nearest valve.

KPIs: Mean time to locate valves fell by about 19%, from 5:11 to 4:12 minutes. Average walking distance per shift dropped by 1 km, saving approximately 130 calories and, more importantly, 13 minutes of labor.

Differentiator: Noisy LiDAR data from inside the 500 °C combustion jackets and glare-affected photogrammetry from outside merged seamlessly.

Automotive Assembly Onboarding

Capture: An overnight LiDAR cart run (70 GB raw) covered 1.4 km of the assembly line. OBJs were exported from CATIA for fixtures, with tiles created every 25 m.

AR Module: Animated arrows direct workers to torque stations, overlaying specifications on bolt faces.

New-hire KPI: Time to achieve 90% first-time torque accuracy dropped from eight to five shifts. Supervisors saved 63 hours per week across two shifts.

Seasonal Warehouse Pick-by-Vision

Capture: Four crews divided a 1.2 million ft² distribution center into 100 tiles, utilizing high-bay photogrammetry (good light) and LiDAR in low-light freezer lanes. Capture was completed in 1.5 days compared to four last year.

Seasonal change: SKUs moved from Aisle 14 to Aisle 30, so a quick rescan of just those two 50 × 50 m tiles was enough to update the maps.

KPIs: Picking efficiency increased by 24% and mis-picks decreased by 36%.
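Deciding which tiles to rescan after a re-slot can be automated if changed areas are known as bounding boxes. The sketch below assumes a regular 50 m grid aligned with the MapSet origin and boxes given in metres; the function name and box format are hypothetical, and floor-based or irregular tiles would need a different lookup.

```python
import math

TILE_SIZE_M = 50.0  # matches the 50 x 50 m tiles described above


def tiles_to_rescan(changed_boxes, tile_size=TILE_SIZE_M):
    """changed_boxes: iterable of (min_x, min_y, max_x, max_y) in metres.

    Returns a sorted list of (column, row) grid indices touched by any box.
    A box that ends exactly on a tile edge also flags the neighbouring tile,
    which errs on the side of rescanning too much rather than too little.
    """
    tiles = set()
    for min_x, min_y, max_x, max_y in changed_boxes:
        first_col = math.floor(min_x / tile_size)
        last_col = math.floor(max_x / tile_size)
        first_row = math.floor(min_y / tile_size)
        last_row = math.floor(max_y / tile_size)
        for col in range(first_col, last_col + 1):
            for row in range(first_row, last_row + 1):
                tiles.add((col, row))
    return sorted(tiles)


# Two re-slotted aisles, each contained in a single tile (sample coordinates).
aisles = [(10.0, 5.0, 40.0, 20.0), (160.0, 5.0, 190.0, 20.0)]
to_patch = tiles_to_rescan(aisles)
```

With the sample boxes only two tiles are flagged, which mirrors the two-tile rescan in this case study.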

Mixed-Reality Retail Pop-Up

Capture: Staff utilized an iPhone 14 Pro (LiDAR/RGB) for a two-pass scan around an 80 m² store over 10 minutes.

Metrics: AR dwell time increased to 2:07 versus 0:54 for a web kiosk. Conversion rates surged by 17% compared to non-AR stores.

Feature Checklist: Copy-Paste for Your RFP

| Must-Have for 2025-2027 | Why It Matters | MultiSet AI | Platforms A-C |
| --- | --- | --- | --- |
| True scan agnosticism | Data-prep cost predictability | Yes | Mixed |
| Multi-floor hand-off | Elevators, stairwells, and mezzanines | Yes | Rare |
| Hot-patch re-mapping | No extensive rebuilds required | Yes | Rare |
| Open exports | Digital-twin migrations | Yes | Mixed |
| Free pilot tier | Proof of Concept without procurement | Yes | Rare |

Print it, give vendors a highlighter, and watch them squirm.

Roll-Out Roadmap: Zero Silo, Zero Regret

Scoping (Day 0)

Audit zones and choose capture technology.

Pro tip: Begin with LiDAR for dark areas and photogrammetry for well-lit, texture-rich areas.

Pilot Scan (Day 1)

Capture 2–3 tiles with the MultiSet Capture App on an iPad Pro (LiDAR) and a DJI drone.

Process & Merge (Days 2–3)

Upload the tiles; map generation then runs automatically.

The MapSet UI previews the transformations so you can make any necessary adjustments.

Pioneer Users (Week 1)

Deploy AR modules to 5–10 devices while monitoring drift.

Scale-out (Weeks 1-3)

Capture remaining zones simultaneously; five crews can cover >1M ft² in a week.

Operationalization (Months 1-3)

Add remaining areas such as freezers and mezzanines, and re-map individual tiles as inventory is re-slotted.

AI & Robotics Integration (Quarter 1+)

Expose MapSet graphs via GraphQL so AGV fleets can localize against the same maps.

Vendor-Lock-Out Plan (Whenever)

Export OBJ meshes and JSON pose graphs so you keep your data even if your strategy changes.
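To show what a portable pose-graph export could look like, here is a small round-trip sketch. The schema, field names, and file names are invented for illustration and are not MultiSet's actual export format; the point is only that tiles plus relative transforms fit comfortably in plain JSON.

```python
import json


def export_pose_graph(tiles, edges):
    """tiles: {tile_id: mesh_file}; edges: (parent, child, translation, rotation)."""
    doc = {
        "schema": "example-pose-graph/1",  # hypothetical identifier
        "tiles": [{"id": tid, "mesh": mesh} for tid, mesh in sorted(tiles.items())],
        "edges": [
            {
                "parent": parent,
                "child": child,
                "translation_m": list(translation),
                "rotation_xyzw": list(rotation),  # unit quaternion
            }
            for parent, child, translation, rotation in edges
        ],
    }
    return json.dumps(doc, indent=2)


def load_pose_graph(text):
    """Inverse of export_pose_graph."""
    doc = json.loads(text)
    tiles = {t["id"]: t["mesh"] for t in doc["tiles"]}
    edges = [
        (e["parent"], e["child"], tuple(e["translation_m"]), tuple(e["rotation_xyzw"]))
        for e in doc["edges"]
    ]
    return tiles, edges


tiles = {"bay-B1": "bay-B1.obj", "bay-B2": "bay-B2.obj"}
edges = [("bay-B1", "bay-B2", (25.0, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0))]
restored = load_pose_graph(export_pose_graph(tiles, edges))
```

A round trip that restores the same tiles and edges is a quick acceptance test to ask of any vendor export.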

Competitive Research Insights - Extra Differentiators

Cross-license headsets and phones: One license covers multiple device types.

On-prem inference option: MultiSet VPS can operate on-premises or in private clouds, a rarity among vendors.

Continuous relocalization: Relocalization is attempted every 5 seconds, catching silent drift early.

Depth fallback in texture voids: Automatically switches to depth-only localization when required.

These differences may seem minor, but they separate toy-like prototypes from robust, production-ready infrastructure in regulated or time-critical operations.
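Continuous relocalization can be pictured as a periodic comparison between the pose the device is tracking and a fresh VPS fix. The sketch below is a simplified, position-only illustration; the class name, the use of 20 mm as the correction trigger, and the timing interface are assumptions rather than a description of the MultiSet SDK.

```python
import math


class DriftMonitor:
    """Compare tracked and relocalized positions on a fixed interval."""

    def __init__(self, interval_s=5.0, drift_limit_m=0.02):
        self.interval_s = interval_s
        self.drift_limit_m = drift_limit_m
        self._last_check_s = None

    def due(self, now_s):
        """True when the next relocalization attempt should run."""
        if self._last_check_s is None:
            return True
        return now_s - self._last_check_s >= self.interval_s

    def check(self, now_s, tracked_xyz, relocalized_xyz):
        """Return the correction offset in metres, or None if drift is within limits."""
        self._last_check_s = now_s
        drift = math.dist(tracked_xyz, relocalized_xyz)
        if drift <= self.drift_limit_m:
            return None
        return tuple(r - t for t, r in zip(tracked_xyz, relocalized_xyz))


monitor = DriftMonitor()
first = monitor.check(0.0, (0.0, 0.0, 0.0), (0.01, 0.0, 0.0))   # 10 mm: fine
too_soon = monitor.due(3.0)
ready = monitor.due(5.0)
second = monitor.check(5.0, (1.0, 1.0, 0.0), (1.05, 1.0, 0.0))  # 50 mm: correct
```

In the sample run the first check passes silently, while the second returns an offset the app could blend in over a few frames to avoid a visible jump.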

Conclusion: Centimeter AR That Adapts as Fast as Your Floor Plan

The AR market’s flashiest demos fall short under freezer-lane darkness or chaotic work environments. The solution isn’t more marketing; it’s a scan-agnostic, drift-resilient, patch-friendly visual positioning system (VPS).

MultiSet AI’s Vision-Fusion:

Accepts various scanning methods, ensuring adaptability across diverse environments.

Provides centimeter accuracy under various lighting conditions without losing fidelity.

Maps multiple floors efficiently, with swift hand-offs between them.

Keeps maps easy to manage and quick to update in dynamic environments.

Ready to test without waiting for procurement? The free tier allows you to experiment with 10 maps and 10,000 localizations—a simple way to see how effective AR can be in your operation.
